The Future of Product Management in an AI-First World

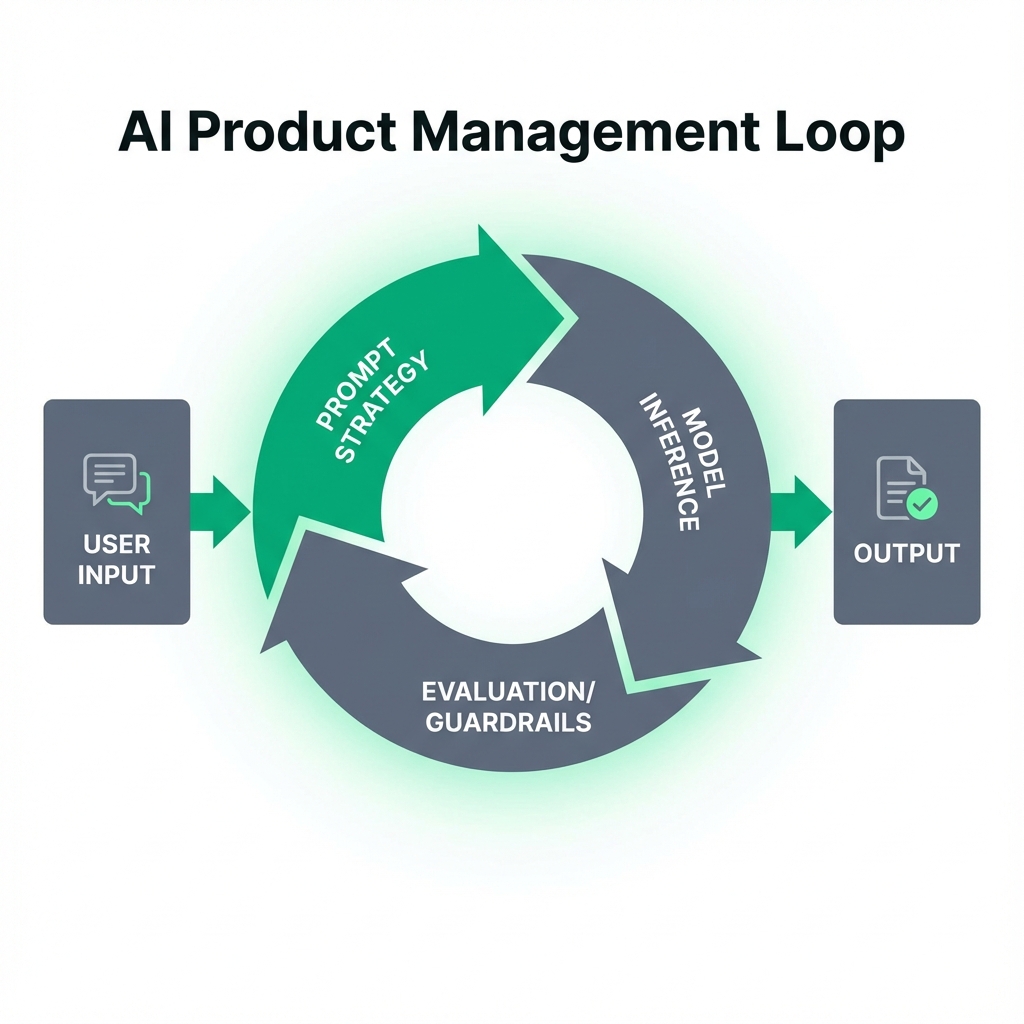

Artificial Intelligence is no longer just a feature; it's the underlying fabric of modern software products. As Product Managers, our role is shifting from defining requirements to defining outcomes and guardrails for AI systems. The traditional PRD is dead; long live the Model Card and the Prompt Strategy.

The Shift from Deterministic to Probabilistic Products

For the last two decades, software product management has been grounded in deterministic logic.If a user clicks button A, then system does B.We write Gherkin syntax(Given - When - Then) to capture this.We build test suites to verify it.We sleep well knowing that the software will behave exactly as we designed it(bugs notwithstanding).

AI products are fundamentally different.They are probabilistic.

The same input might yield different outputs based on temperature settings, model updates, or cosmic rays.When you integrate an LLM, you are introducing a non - deterministic black box into your carefully architected system.This requires a massive shift in mindset for PMs.

The New Definition of "Done"

In a deterministic world, "done" means the feature passes all acceptance criteria.In a probabilistic world, "done" is a statistical confidence interval.A feature is ready when it answers correctly 95 % of the time and fails gracefully the other 5 %.Defining what "graceful failure" looks like is now a core PM responsibility.

Key Differences

- Traditional PM: Defines exact behavior. Focuses on happy paths and edge cases.

Tool: Jira / PRD - AI PM: Defines outcome quality and guardrails. Focuses on prompt evaluation and data curation.

Tool: Eval Evals / Model Cards

The New PM Toolkit: Beyond Jira

To thrive in 2025, Product Managers need to add new tools to their arsenal.Understanding Agile is table stakes.The new competitive advantage comes from understanding the AI stack.

1. Prompt Engineering as Prototyping

The feedback loop for prototyping has collapsed to zero.You don't need a designer to mock up a chat interface or a developer to build a backend. You can open a playground (OpenAI, Anthropic, Gemini) and prototype the logic of your feature in natural language.

Actionable Tip: Don't just write a one-line prompt. Build a "System Prompt" library for your product. Define the persona, the constraints, and the output format. Treat these prompts as code—version control them.

2. Evaluation Frameworks(Evals)

How do you know if your RAG(Retrieval Augmented Generation) pipeline is "good" ? "It feels better" is not a metric.You need quantitative measures.

We are seeing the rise of "LLM-as-a-Judge" frameworks where a stronger model(e.g., GPT - 4) evaluates the output of a faster / cheaper model(e.g., GPT - 3.5) based on criteria like:

- Faithfulness: Is the answer derived solely from the retrieved context?

- Answer Relevance: Does it actually answer the user's question?

- Context Recall: Did we retrieve the right documents?

3. Data Curation as Product Strategy

In the past, data was something we collected to analyze usage.Now, data is the product itself.The quality of your fine - tuning or RAG dataset determines the quality of your user experience.PMs must get their hands dirty with data cleaning, categorization, and synthetic data generation.

Ethical Guardrails and Safety

With great power comes great responsibility.PMs are now the gatekeepers of AI ethics.We must rigorously test for bias, hallucination, and harmful content.It's not enough to build a cool feature; we must assume responsibility for its output.

Consider the "jailbreak" scenarios.Users will try to manipulate your bot.Your prompt strategy must include robust defense layers. OWASP Top 10 for LLMs is mandatory reading.

The "Human-in-the-Loop" Future

The goal isn't always full automation. The most successful AI products in 2025 are "Copilots" that augment human decision-making rather than replacing it. The PM's job is to identify the "handoff points"—where should the AI stop and the human take over ?

For example, in a medical diagnosis tool, the AI might surface anomalies and suggest potential causes, but the doctor must make the final diagnosis. Designing this interaction—the friction, the confirmation steps, the confidence scores—is pure UX/Product strategy.

Conclusion: The conductor, not the coder

The PM of the future is part sociologist, part data scientist, and part user advocate.The core job remains the same—solving customer problems—but the how has changed forever. We are moving from being the "CEO of the Product" to being the "Conductor of the Intelligence."